Hello,

I segmented and created labels of the upper cervical cord on some 3D FLAIR images. Now I would like to compare the CSA of different groups of subjects. I was thinking that I should scale them somehow to avoid biases related to anatomical or FOV factors (like the scaling that SIENAX does for brain images). Am I right, or is it not necessary?

Is it possible to do this scaling by registering the images to the template, or is there another way?

Do you know if the CSA of the upper cervical cord given by the SCT segmentation of FLAIR images is reliable?

Thank you very much

Hi @Gianfranco,

> I segmented and created labels of the upper cervical cord on some 3D FLAIR images. Now I would like to compare the CSA of different groups of subjects. I was thinking that I should scale them somehow to avoid biases related to anatomical or fov factors ( like the scaling for the brain images that SIENAX does). Am I right or it is not necessary?

CSA computation outputs values in mm², using the voxel dimensions from the NIfTI header, so it is independent of the FOV/matrix size used.
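To illustrate why a measure in mm² does not depend on the FOV or matrix size, here is a minimal sketch that simply counts mask voxels and multiplies by the in-plane voxel area. This is not SCT's implementation; the function name `slice_csa_mm2` and the toy masks are made up for the example, and the voxel sizes are passed in by hand rather than read from a NIfTI header.

```python
import numpy as np

def slice_csa_mm2(mask_slice, px_mm, py_mm):
    """Area of one axial slice of a binary mask, in mm^2 (plain voxel counting)."""
    return float(np.count_nonzero(mask_slice)) * px_mm * py_mm

# The same hypothetical "cord" (a 10 mm x 8 mm rectangle) in three acquisitions.
small_fov = np.zeros((32, 32), dtype=np.uint8)      # small matrix, 1 mm voxels
small_fov[10:20, 12:20] = 1

large_fov = np.zeros((256, 256), dtype=np.uint8)    # large matrix, 1 mm voxels
large_fov[100:110, 120:128] = 1

fine_res = np.zeros((128, 128), dtype=np.uint8)     # 0.5 mm voxels: 20 x 16 voxels
fine_res[40:60, 50:66] = 1

csa_small = slice_csa_mm2(small_fov, 1.0, 1.0)
csa_large = slice_csa_mm2(large_fov, 1.0, 1.0)
csa_fine = slice_csa_mm2(fine_res, 0.5, 0.5)
print(csa_small, csa_large, csa_fine)
```

All three values are the same because the physical size of the structure is the same; only the sampling grid differs.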

> Do you know if the CSA of the upper cervical cord given by the SCT segmentation of FLAIR images are reliable?

If the segmentation is reliable, then yes, the CSA computation is reliable. How is the segmentation on your images? If you run the QC on a few subjects and find that minimal (or no) manual correction is necessary at your levels of interest, then I would call this reliable.

Best

Julien

Hi Julien,

I think that this question is connected with this one.

Briefly, when you use SIENAX, you rigidly register your brain to the MNI brain and then, from the diagonal of the transformation matrix, you obtain the scaling factor. This scaling factor tells you how big or small your brain is relative to the standard brain. The values usually range from 0.7-0.8 to 1.2-1.4. The Normalised Brain Volume is also computed, which is basically the tissue mask volumes resampled to MNI space. See the pairreg and SIENAX scripts from FSL for details.

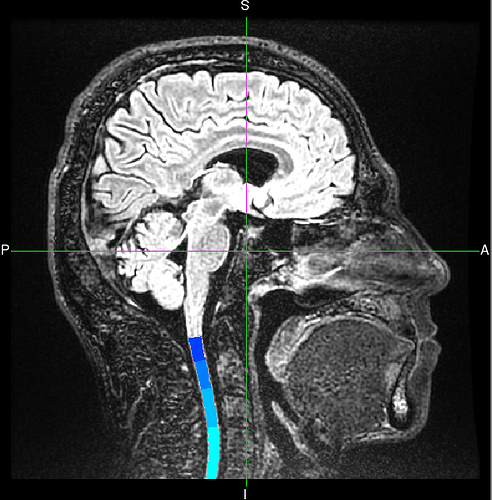
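As a rough illustration of reading a scaling factor out of a registration matrix, the sketch below takes a 4x4 transform and extracts per-axis scales (column norms of the 3x3 linear part) and a volumetric scale (absolute determinant). This is not the FSL code; the function name `scaling_from_affine` and the example matrix are hypothetical, and the per-axis values are only meaningful as scales when the transform is a rotation combined with scaling, with no shear.

```python
import numpy as np

def scaling_from_affine(affine):
    """Return (per-axis scales, volumetric scale) from a 4x4 transform.

    Assumes the linear part is rotation @ diag(scales), i.e. no shear.
    """
    linear = np.asarray(affine, dtype=float)[:3, :3]
    per_axis = np.linalg.norm(linear, axis=0)    # length of each column
    volumetric = abs(np.linalg.det(linear))      # volume change factor
    return per_axis, volumetric

# Hypothetical transform: 10 degree rotation about z, scales (1.1, 1.2, 0.9),
# plus a translation (which does not affect the scaling).
theta = np.deg2rad(10.0)
rotation = np.array([
    [np.cos(theta), -np.sin(theta), 0.0],
    [np.sin(theta),  np.cos(theta), 0.0],
    [0.0,            0.0,           1.0],
])
affine = np.eye(4)
affine[:3, :3] = rotation @ np.diag([1.1, 1.2, 0.9])
affine[:3, 3] = [5.0, -3.0, 2.0]

per_axis, volumetric = scaling_from_affine(affine)
print(per_axis, volumetric)
```

Using column norms rather than the raw diagonal keeps the result correct when the transform also contains a rotation; for an axis-aligned transform the two coincide.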

Ideally, using SCT, to obtain a spinal cord scaling factor you would need to straighten the cord and then register the straightened cord to the PAM template. But this rigid registration is where we may face more challenges, because you could register the whole field of view or only the part that you are measuring (the upper cervical cord or whatever region).

Best

Ferran

Thank you for the context @fprados, yes, this makes more sense to me now. So, just to be clear: you meant affine transformation instead of rigid, right? Because the latter does not include scaling (only Tx, Ty, Tz, Rx, Ry, Rz).

Indeed, a SIENAX-like approach for the cord seems tricky because it relies on a few assumptions about how the scaling applies to the cord: in the axial plane only? If so, volume-wise or slice-wise? Before suggesting an approach, a study should focus on answering these questions, in order to figure out whether this approach ends up being more efficient than a “simple” CSA calculation, which ultimately gives you the same number (i.e. you get the scaling factor by comparing the subject’s CSA with the PAM50’s CSA).
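To make the “simple” alternative concrete, here is a minimal sketch of a CSA-based scaling factor: the ratio of the subject's CSA to a template CSA at each level, plus the mean ratio. The level-wise granularity is just one possible choice among those raised above, the function name `csa_scaling_factors` is made up, and all CSA values (including the template ones) are placeholder numbers, not real PAM50 measurements.

```python
def csa_scaling_factors(subject_csa, template_csa):
    """Per-level ratio subject/template CSA and the mean ratio.

    Both arguments map a level label (e.g. "C2") to a CSA in mm^2.
    Only levels present in both dictionaries are used.
    """
    common = sorted(set(subject_csa) & set(template_csa))
    if not common:
        raise ValueError("no vertebral level in common")
    per_level = {lvl: subject_csa[lvl] / template_csa[lvl] for lvl in common}
    mean_factor = sum(per_level.values()) / len(per_level)
    return per_level, mean_factor

# Placeholder values in mm^2, for illustration only.
subject = {"C1": 72.0, "C2": 70.0, "C3": 66.0}
template = {"C1": 80.0, "C2": 70.0, "C3": 60.0}

per_level, mean_factor = csa_scaling_factors(subject, template)
print(per_level, mean_factor)
```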

Cheers,

Julien

Yes, SIENAX computes brain tissue volume, normalised for subject head size. In brief, the brain image is affine-registered to MNI152 space (using the skull image as reference to determine the registration scaling); this is primarily in order to obtain the volumetric scaling factor, to be used as a normalisation for head size.

Hence, if we register the cord (GM+WM) directly to the PAM50, we are not going to get the same effect, because the cord is a soft tissue and the SIENAX idea relies on the skull/bones. If we do it with soft tissues like GM+WM, we are indirectly computing atrophy, not cord size. So we would need to find another “static” reference. It is also important that this reference does not use the element that we want to scale (i.e. the inner cord). I could suggest a few external landmarks such as disc levels, the outer CSF boundary, the vertebrae, … Then, as a first idea, I would compute this scaling factor per cord segment, using a straightened cord, and take the mean over all the segments that you have. I think the SCT pipeline that computes the registration between a cord and the PAM50 template could help.

@jcohenadad and @Gianfranco, maybe we could set up a Skype meeting and brainstorm about this.

Best

Ferran.

> If we do it with the soft tissues like GM+WM, indirectly we are computing atrophy, not cord size.

I’m not sure I agree with this. “Atrophy” is simply the reduction in size (volume or CSA) of a given structure (e.g. spinal cord) across time. So, atrophy reflects the reduction of cord size. Now, when we compute the CSA of the cord, we do measure cord size. The only difference from SIENAX is that this cord size is absolute (e.g. in mm²) and not normalized by another structure (e.g. the bony spine structure). Whether cord normalization is necessary (and how to do it) is still an open question, IMHO.

> Then, we would need to find another “static” reference. It is also important that for this reference we don’t use the element that we want to scale (i.e.: inner cord). I could suggest a few external landmarks such as disc level,

This is already possible with SCT.

> outer CSF boundary, vertebrae

I can see many problems with using the CSF boundary and/or the vertebrae, but I’m happy to discuss it in a video call. I suggest we make this discussion open to the community. Here is a doodle, everyone is welcome to add their name. I’ll send a http://meet.jit.si/ link once we find a date.

We picked the final date: June 5th at 12 pm EST. Here is the link to the meeting: https://meet.jit.si/LoosePeppersFancyRecently. Looking forward to it!